Introduction

Build your own insect-detecting camera trap!

This website provides instructions on hardware assembly, software setup, model training and deployment of the Insect Detect camera trap that can be used for automated insect monitoring.

Background

Long-term monitoring data at a high spatiotemporal resolution is essential to investigate potential drivers and their impact on the widespread insect decline (Wagner, 2020), as well as to design effective conservation strategies (Samways et al., 2020). Automated monitoring methods can extend the ecologists' toolbox and acquire high-quality data with less time/labor input compared to traditional methods (Besson et al., 2022). If standardized, easily accessible and reproducible, these methods could furthermore decentralize monitoring efforts and strengthen the integration of independent biodiversity observations (Citizen Science) (Kühl et al., 2020).

A range of different sensors can be used for automated insect monitoring (van Klink et al., 2022). These include acoustic (e.g. Kawakita & Ichikawa, 2019) and opto-electronic sensors (e.g. Potamitis et al., 2015; Rydhmer et al., 2022), as well as cameras (overview in Høye et al., 2021). Several low-cost DIY camera trap systems for insects use scheduled video or image recordings, which are analyzed in a subsequent processing step (e.g. Droissart et al., 2021; Geissmann et al., 2022). Other systems use motion detection software as a trigger for the image capture (e.g. Bjerge et al., 2021a; overview in Pegoraro et al., 2020). As with traditional camera traps used for monitoring mammals, the often large amount of image data produced in this way can be processed and analyzed most efficiently with machine learning (ML) and especially deep learning (DL) algorithms (Borowiec et al., 2022), which automatically extract information such as species identity, abundance and behaviour (Tuia et al., 2022).

Small DL models with relatively low computational costs can be run on suitable edge devices to enable real-time detection of the objects the model was trained on. Bjerge et al. (2021b) developed a camera trap for automated pollinator monitoring, which combines scheduled time-lapse image recordings with subsequent on-device insect detection/classification and tracking implemented during post-processing. The appearance and detection of an insect can also be used as a trigger to automatically start a recording. By integrating the information extraction into the recording process, this can drastically reduce the amount of data that has to be stored. The DIY camera trap presented here supports on-device detection and tracking of insects, combined with high-resolution frame synchronization in real time.

The necessity of automated biodiversity monitoring

"We believe that the fields of ecology and conservation biology are in the midst of a rapid and discipline-defining shift towards technology-mediated, indirect biodiversity observation. [...] Finally, for those who remain sceptical of the value of indirect observations, it is also useful to remember that we can never predict the advances in methods that may occur in the future. Unlike humans in the field, automated sensors produce a permanent visual or acoustic record of a given location and time that is far richer than a simple note that 'species X was here at time Y'. Similar to museum specimens, these records will undoubtedly be reanalysed by future generations of ecologists and conservation biologists using better tools than we have available now in order to extract information and answer questions that we cannot imagine today. And these future researchers will undoubtedly thank us, as we thank previous generations of naturalists, for having the foresight to collect as many observations as possible of the rapidly changing species and habitats on our planet." (Kitzes & Schricker, 2019)

Overview

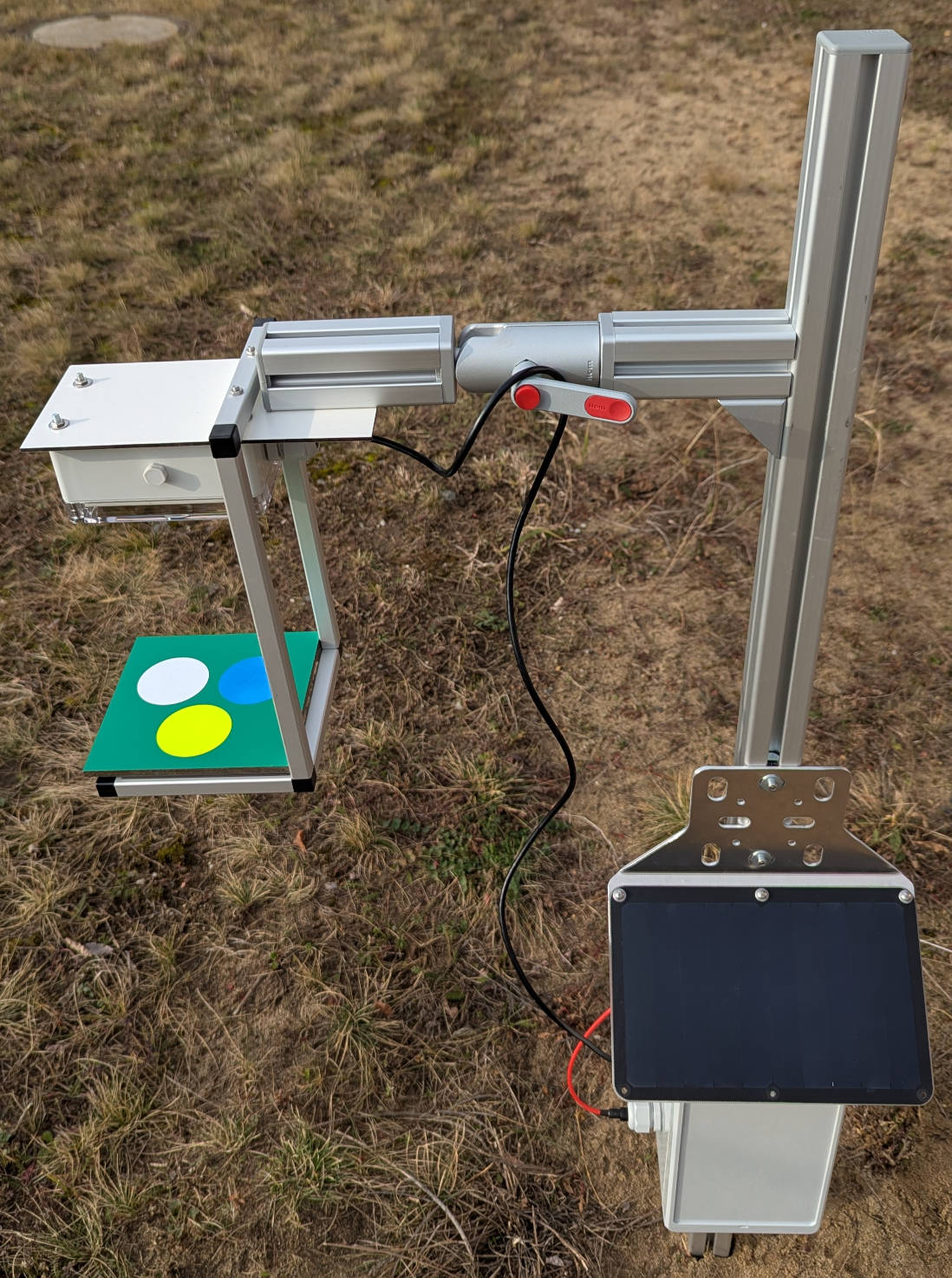

The Insect Detect DIY camera trap for automated insect monitoring is composed of low-cost off-the-shelf hardware components combined with open-source software, and can be easily assembled and set up with the provided instructions. The annotated datasets and provided models for insect detection and classification can be used as a starting point to train your own models, e.g. adapted to different backgrounds or insect taxa.

The use of an artificial platform provides a homogeneous and constant background, which standardizes the visual attraction for insects and leads to higher detection and tracking accuracy with less data required for model training. Because of the flat design, the posture of insects landing on the platform is more uniform, which can lead to better classification results and fewer images required for model training. In ongoing research efforts, different materials, shapes and colors are tested to enhance the visual attraction for specific pollinator groups.

Implemented functions

- non-invasive, continuous automated monitoring of flower-visiting insects

- standardized artificial flower platform as visual attractant

- on-device detection and tracking in real time with provided YOLO models

- save images of detected insects cropped from high-resolution frames (4K)

- low power consumption (~3.8 W) and fully solar-powered

- automated classification and post-processing in subsequent step on local PC

- weatherproof enclosures and highly customizable mounting setup

- easy to build and deploy with low-cost off-the-shelf hardware components

- completely open-source software with detailed documentation

- instructions and notebooks to train and deploy custom models
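The cropping step listed above (saving detected insects cropped from the high-resolution frames) can be illustrated with a minimal sketch. It assumes that the detector returns bounding boxes normalized to the range 0-1 and that the "4K" frame is 3840x2160 pixels; the function name `bbox_to_pixels` is hypothetical and not taken from the project's scripts.

```python
def bbox_to_pixels(bbox_norm, frame_width=3840, frame_height=2160):
    """Map a normalized bounding box to pixel coordinates of a larger frame.

    bbox_norm: (xmin, ymin, xmax, ymax), each value expected in the range 0-1
               (assumed output format of the low-resolution detector).
    Returns (left, top, right, bottom) as integers, clamped to the frame.
    """
    def clamp(value):
        # Boxes can slightly exceed the frame border, so limit values to 0-1
        return min(max(value, 0.0), 1.0)

    xmin, ymin, xmax, ymax = (clamp(v) for v in bbox_norm)
    left = int(round(xmin * frame_width))
    top = int(round(ymin * frame_height))
    right = int(round(xmax * frame_width))
    bottom = int(round(ymax * frame_height))
    return left, top, right, bottom


# Example: a detection covering the lower-middle part of the frame
left, top, right, bottom = bbox_to_pixels((0.25, 0.5, 0.5, 0.75))
print(left, top, right, bottom)     # 960 1080 1920 1620
print(right - left, bottom - top)   # crop size in pixels: 960 540
```

With an image array in height x width x channels layout, the crop would then be `frame[top:bottom, left:right]`. If the detection input has a different aspect ratio than the high-resolution frame, the coordinates may need an additional correction that this sketch does not cover.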

Not implemented (yet)

- on-device classification and metadata post-processing

- remote data transfer (e.g. via NB-IoT or LTE module)

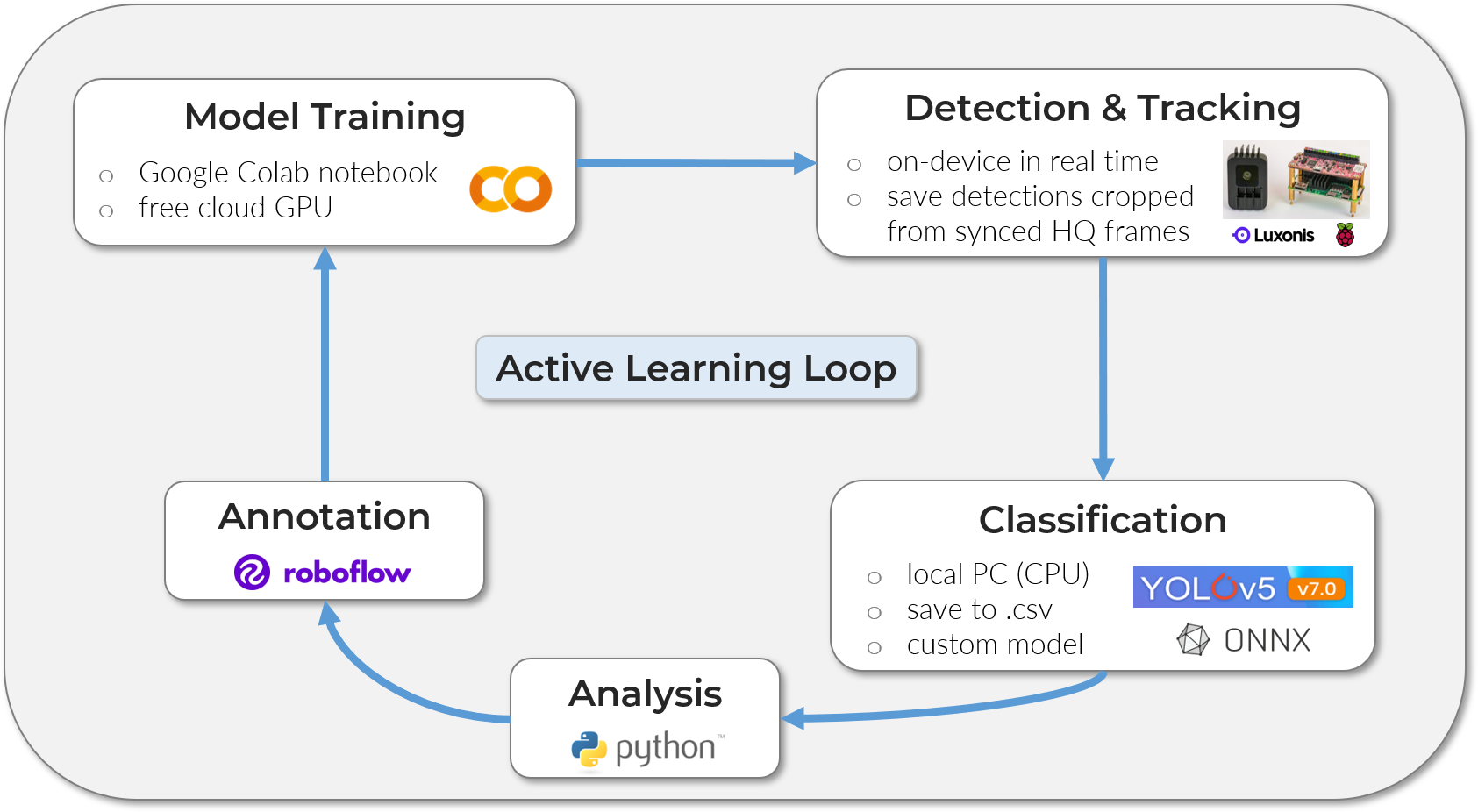

Especially when deploying the camera trap system in new environments, edge cases (low confidence score or false detection/classification) should be identified and models retrained with new data (correctly annotated images). This iterative Active Learning loop of retraining and redeploying can ensure high detection and classification accuracy over time. With the combination of Roboflow for annotation and dataset management and Google Colab as a cloud training platform, this can be achieved in a straightforward way and free of charge, even without prior knowledge or specific hardware.
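As a rough illustration of the first step of such a loop, the following sketch collects low-confidence detections so that they can be reviewed and re-annotated. The `Detection` record, the file names and the threshold of 0.5 are assumptions made for this example and are not part of the Insect Detect software.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical record for one saved detection."""
    image_path: str
    label: str
    confidence: float


def select_for_review(detections, threshold=0.5):
    """Return detections below the confidence threshold, least confident first."""
    uncertain = [d for d in detections if d.confidence < threshold]
    return sorted(uncertain, key=lambda d: d.confidence)


# Made-up example values, only to show the selection logic
detections = [
    Detection("crop_001.jpg", "insect", 0.93),
    Detection("crop_002.jpg", "insect", 0.41),
    Detection("crop_003.jpg", "insect", 0.18),
]

review_queue = select_for_review(detections)
for det in review_queue:
    print(f"{det.image_path}: {det.confidence:.2f}")
```

The images in the review queue would then be annotated correctly, added to the training dataset and used to retrain the model. False detections with a high confidence score are not caught by a simple threshold and still require manual inspection.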

In the Hardware section of this website, you will find a list with all required components and detailed instructions on how to assemble the camera trap system. Only some standard tools are necessary, which are listed in the Hardware overview.

In the Software section, all steps to get the camera trap up and running are explained. You will start with installing the necessary software on your local PC, to communicate with the Raspberry Pi. After the Raspberry Pi is configured, check the usage instructions for information on custom configuration and how to use the scripts for live preview and automated recording.

The Model Training section will show you tools to annotate your own images and use these to train custom object detection models that can be deployed on the OAK-1 camera. To classify the cropped insect images, you can then train a custom image classification model that can be run on your local PC (no GPU necessary). All of the model training can be done in Google Colab, where you will have access to a free cloud GPU for fast training. This means that all you need is a Google account; no special hardware is required.

The Deployment section contains details about each step of the processing pipeline, from on-device detection and tracking, to classification of the cropped insect images on your local PC and subsequent metadata post-processing of the combined results.

GitHub repositories

- YOLO insect detection models and Python scripts for testing and deploying the Insect Detect DIY camera trap system for automated insect monitoring.

- Notebooks for object detection and image classification model training. Insect classification model. Python scripts for data post-processing.

- Source files and assets of this documentation website, based on Material for MkDocs.

- YOLOv5 fork with modifications to improve classification model training, validation and prediction. Adapted to data captured with the Insect Detect DIY camera trap.

Datasets

- Dataset to train insect detection models. Contains annotated images collected in 2022 with the DIY camera trap and the first version of the flower platform as background.

- Dataset to train insect classification models. Contains images mostly collected in 2023 with several DIY camera traps.

Models

Detection models

| Model | size (pixels) | mAP val 50-95 | mAP val 50 | Precision val | Recall val | Speed OAK (fps) |
|---|---|---|---|---|---|---|
| YOLOv5n | 320 | 53.8 | 96.9 | 95.5 | 96.1 | 49 |
| YOLOv6n | 320 | 50.3 | 95.1 | 96.9 | 89.8 | 60 |
| YOLOv7tiny | 320 | 53.2 | 95.7 | 94.7 | 94.2 | 52 |
| YOLOv8n | 320 | 55.4 | 94.4 | 92.2 | 89.9 | 39 |

Table Notes

- All models were trained to 300 epochs with batch size 32 and default hyperparameters. Reproduce the model training with the provided Google Colab notebooks.

- Trained on Insect_Detect_detection dataset version 7 with only 1 class ("insect").

- Model metrics (mAP, Precision, Recall) are shown for the original PyTorch (.pt) model before conversion to ONNX -> OpenVINO -> .blob format. Reproduce metrics by using the respective model validation method.
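One way to read the table above is as a trade-off between accuracy and inference speed. The following sketch uses the mAP 50-95 and speed values from the table and picks the most accurate model that still reaches a required frame rate. The helper `best_model` and the example frame rates are assumptions for illustration. Keep in mind that the metrics refer to the PyTorch models before conversion, so the accuracy of the converted models on the OAK device may differ.

```python
# Values copied from the detection model table (validation metrics, speed on OAK)
MODELS = [
    {"name": "YOLOv5n", "map50_95": 53.8, "fps": 49},
    {"name": "YOLOv6n", "map50_95": 50.3, "fps": 60},
    {"name": "YOLOv7tiny", "map50_95": 53.2, "fps": 52},
    {"name": "YOLOv8n", "map50_95": 55.4, "fps": 39},
]


def best_model(models, min_fps):
    """Return the model with the highest mAP 50-95 that reaches min_fps, or None."""
    candidates = [m for m in models if m["fps"] >= min_fps]
    if not candidates:
        return None
    return max(candidates, key=lambda m: m["map50_95"])


print(best_model(MODELS, min_fps=45)["name"])  # YOLOv5n
print(best_model(MODELS, min_fps=55)["name"])  # YOLOv6n
print(best_model(MODELS, min_fps=0)["name"])   # YOLOv8n
```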

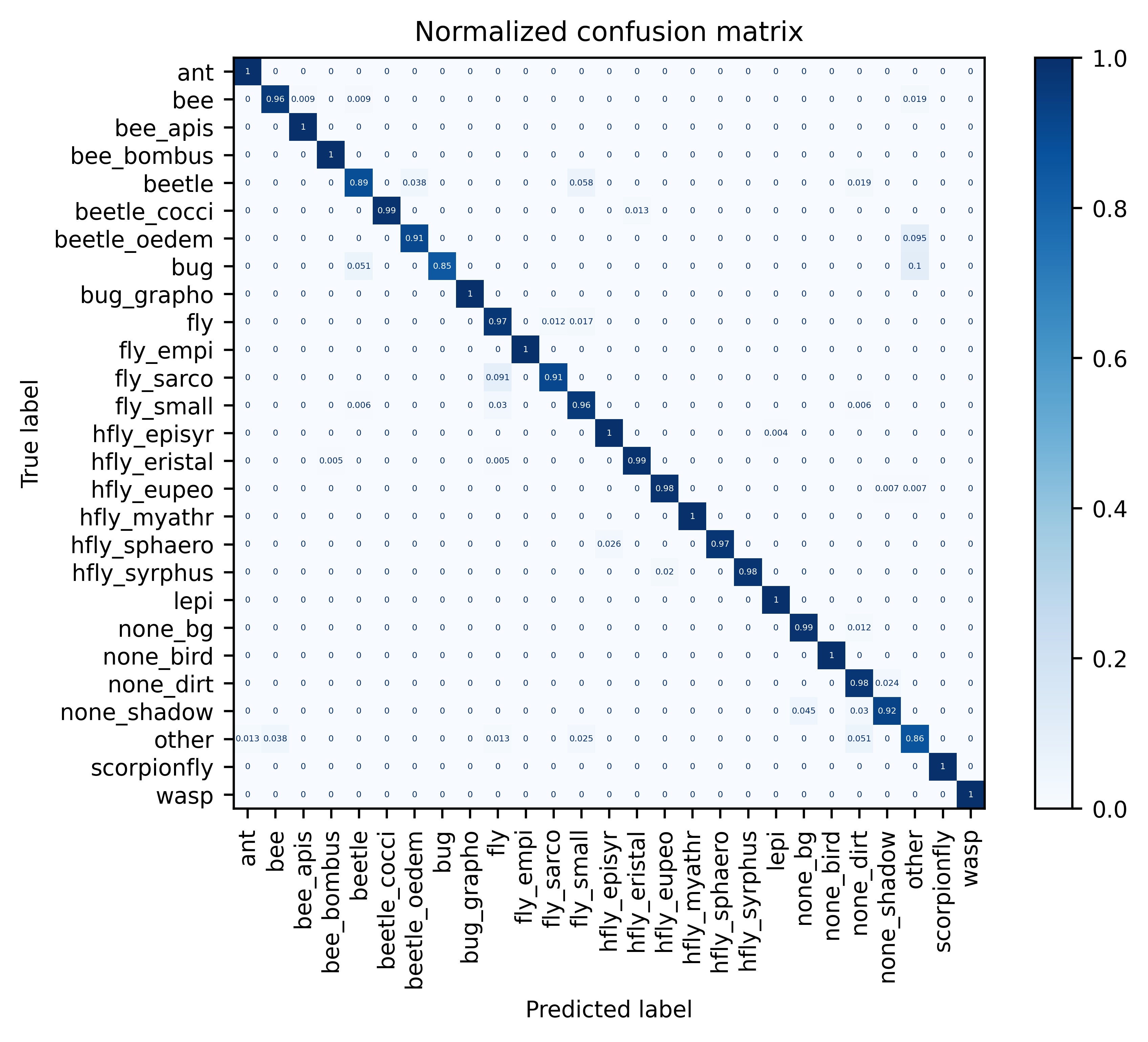

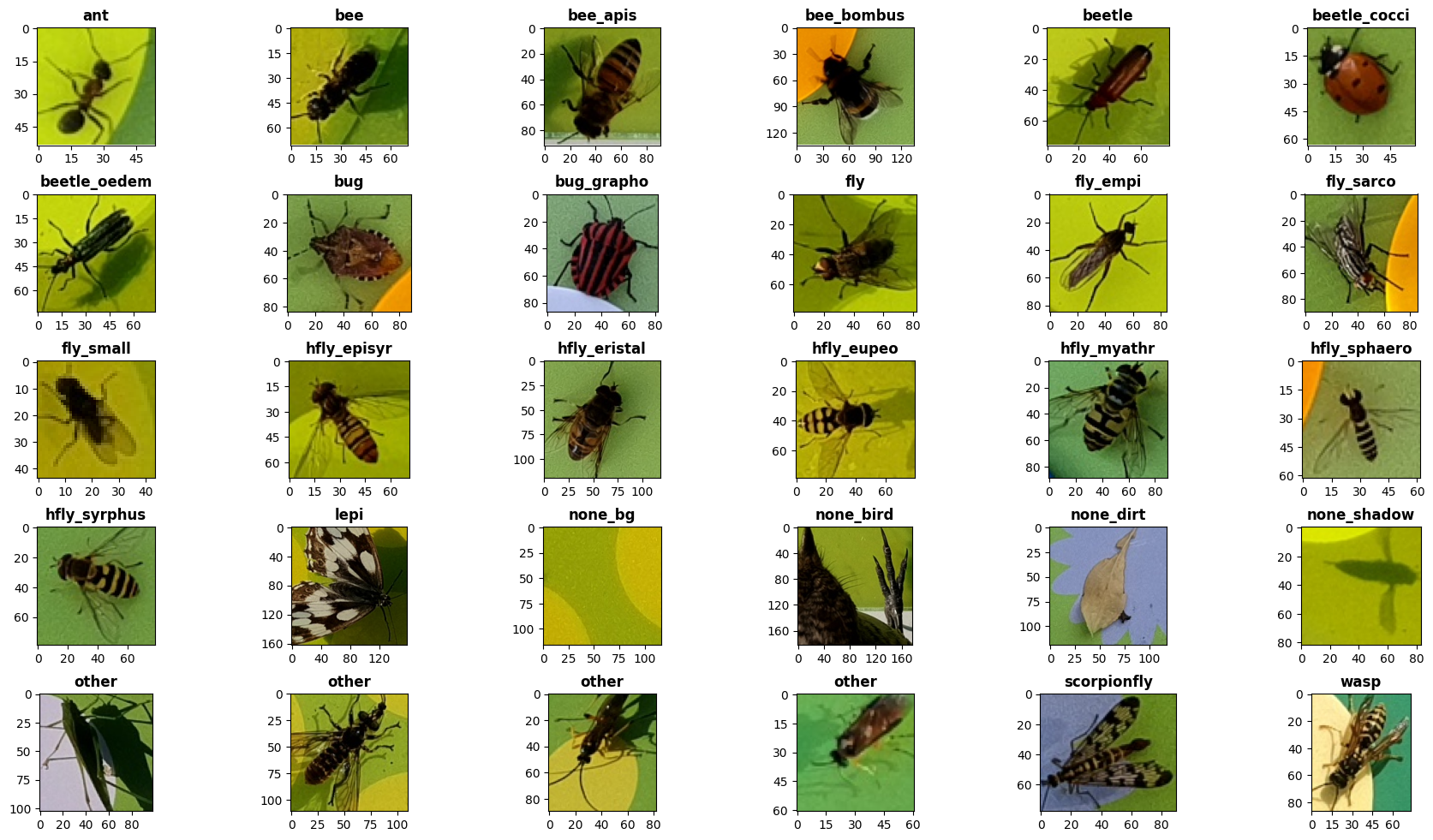

Classification model

| Model (.onnx) | size (pixels) | Top1 Accuracy test | Precision test | Recall test | F1 score test |
|---|---|---|---|---|---|
| EfficientNet-B0 | 128 | 0.972 | 0.971 | 0.967 | 0.969 |

Table Notes

- The model was trained to 20 epochs with image size 128, batch size 64 and default settings and hyperparameters. Reproduce the model training with the provided Google Colab notebook.

- Trained on Insect Detect - insect classification dataset v2 with 27 classes. To reproduce the dataset split, keep the default settings in the Colab notebook (train/val/test ratio = 0.7/0.2/0.1, random seed = 1).

- Dataset can be explored at Roboflow Universe. Export from Roboflow compresses the images and can lead to a decreased model accuracy. It is recommended to use the uncompressed dataset from Zenodo.

- Full model metrics are available in the insect-detect-ml GitHub repo.
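The role of the fixed random seed in the dataset split mentioned in the notes above can be shown with a small sketch. It uses the same ratio (0.7/0.2/0.1) and seed (1), but it is not the code from the Colab notebook and will not reproduce the exact same split; the function `split_dataset` and the file names are hypothetical.

```python
import random


def split_dataset(items, ratios=(0.7, 0.2, 0.1), seed=1):
    """Split items into train/val/test subsets in a reproducible way."""
    # Sort first so the result does not depend on the input order
    shuffled = sorted(items)
    # A dedicated Random instance keeps the global random state untouched
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remaining items
    return train, val, test


# Hypothetical file names standing in for the dataset images
files = [f"img_{i:03d}.jpg" for i in range(100)]
train, val, test = split_dataset(files)
print(len(train), len(val), len(test))  # 70 20 10
```

Calling `split_dataset` again with the same seed returns identical subsets, which is what makes reported test metrics comparable between training runs.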

Licenses

This documentation website and its content are licensed under the terms of the Creative Commons Attribution-ShareAlike 4.0 International License (CC BY-SA 4.0).

Resources from the insect-detect GitHub repository are licensed under the terms of the GNU General Public License v3.0 (GNU GPLv3).

Resources from the insect-detect-ml GitHub repository and yolov5 fork are licensed under the terms of the GNU Affero General Public License v3.0 (GNU AGPLv3).

Citation

If you use resources from this project, please cite our paper: